A big challenge in online learning, especially when learners access content on demand (asynchronous learning), is helping learners monitor whether they are mastering the material while sustaining their engagement. Because learning designers can’t speak directly with students in on-demand courses, students need tools to identify gaps and misconceptions in their own learning, and those tools must work at scale.

To help our students identify gaps and misconceptions in their learning and reach mastery of the content, we launched Platzi Quizzes: short tests at the end of modules that course designers could add to any course to check concepts or assess the application of recently learned material.

Quizzes, originally launched for the mobile app, were quickly adopted on all devices to ensure the best experience for learners.

Some curious facts about quizzes:

- Voice and audio were available in the app to help students learning English as a second language practice pronunciation.

- The question format included images and text.

- The test results would not affect the student’s progress in the course or final results. The aim was to keep it low-stakes to ensure they had the opportunity to monitor their own understanding in a low-stress environment.

While the feature was simple, its impact on our quality metrics was clearly positive. Courses with Quizzes had higher completion rates, students performed better on the course exam, and course efficiency was also significantly higher.

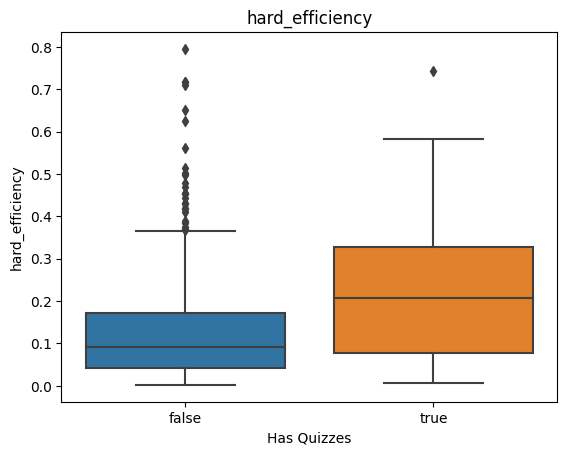

For example, a strong metric for whether a course helps students understand concepts is what I called Hard Efficiency, defined as the percentage of students who pass the test out of the total number of students enrolled in the course. Courses with quizzes had a stronger Hard Efficiency than courses without quizzes:

The median Hard Efficiency for courses with quizzes was 21%, compared to 9% in courses without the feature.

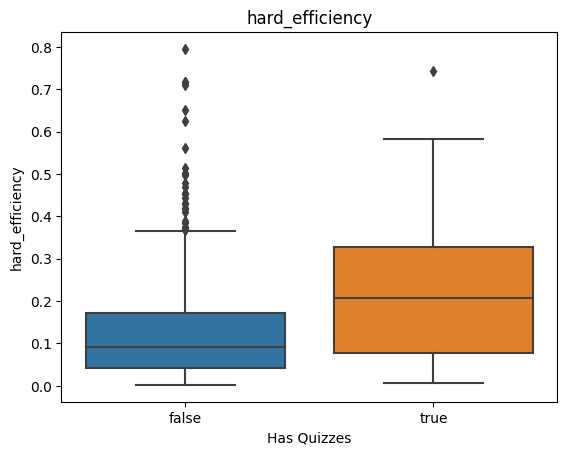
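To make the Hard Efficiency definition concrete, here is a minimal Python sketch. The function name, the input shape, and the sample counts are illustrative assumptions, not Platzi's actual pipeline or data; only the formula (students who passed divided by students enrolled) and the use of a median across courses come from the text.

```python
from statistics import median


def hard_efficiency(passed: int, enrolled: int) -> float:
    """Percentage of enrolled students who passed the test."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    return 100.0 * passed / enrolled


# Hypothetical (passed, enrolled) counts per course; not real Platzi data.
courses = [(21, 100), (45, 300), (30, 100)]

# Compute the metric per course, then summarize the group with the median.
median_hard_efficiency = median(hard_efficiency(p, e) for p, e in courses)
```

Note that the denominator is everyone enrolled, not only students who reached the exam, which is why the metric is "hard": a course only scores well if students both stay and pass.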

But that metric was not the only quality indicator that improved. For example, the completion rate for courses with a quiz was also significantly higher.

The completion rate is a key indicator because students who complete a course remain active for longer and have higher chances of subscription renewal, so improving completion rates in courses is directly linked to improving business outcomes. This feature successfully addressed that need.

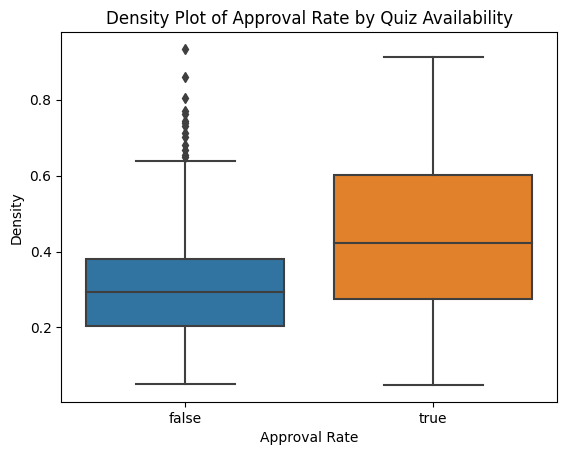

Also, the Approval Rate, defined as the percentage of students who pass the course exam on their first attempt, was higher for courses where Quizzes were available, because students had more opportunities to reinforce their learning through retrieval and practice of the learning content:

While 30% of students passed the course exam on the first attempt in courses without quizzes, courses with quizzes had an Approval Rate of 44%, indicating stronger mastery of the content at the moment of the final exam.

To know whether Quizzes were truly driving these improvements, we needed an additional metric to validate the effect. We reasoned that if quizzes were helping students learn more effectively, the rate of students attempting the final exam a second time should decrease in courses with quizzes.

I was very excited to confirm that, just as predicted, courses without quizzes had a Final Exam Retake Rate of 52%, meaning about half of the students who took the exam tried a second time, compared to 46% for courses with a Quiz. The lower retake rate supports the conclusion that fewer students needed a second attempt, while more of them completed the course and certified course mastery on the first attempt.
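The Approval Rate and the Final Exam Retake Rate can be sketched the same way. This is a hedged illustration: the `ExamHistory` record, the function names, and the sample data are hypothetical, and the choice of denominator (students who attempted the exam at least once) is an assumption based on how the two metrics are described above.

```python
from dataclasses import dataclass


@dataclass
class ExamHistory:
    """One student's final exam attempts, in order (True = passed)."""
    attempts: list[bool]


def _takers(histories: list[ExamHistory]) -> list[ExamHistory]:
    # Assumption: both rates are computed over students who took the exam.
    takers = [h for h in histories if h.attempts]
    if not takers:
        raise ValueError("no student attempted the exam")
    return takers


def approval_rate(histories: list[ExamHistory]) -> float:
    """Percentage of exam takers who passed on their first attempt."""
    takers = _takers(histories)
    first_try_passes = sum(1 for h in takers if h.attempts[0])
    return 100.0 * first_try_passes / len(takers)


def retake_rate(histories: list[ExamHistory]) -> float:
    """Percentage of exam takers who made a second attempt."""
    takers = _takers(histories)
    retakers = sum(1 for h in takers if len(h.attempts) >= 2)
    return 100.0 * retakers / len(takers)


# Hypothetical data for one course; the last student never took the exam.
sample = [
    ExamHistory([True]),
    ExamHistory([False, True]),
    ExamHistory([False, False]),
    ExamHistory([True]),
    ExamHistory([]),
]
```

With this sample, two of the four exam takers pass on the first try and two make a second attempt, so both functions return 50.0.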

Curiously enough, the survey we use to assess course quality was not affected by quizzes: students did not say they liked the course more, even though they learned more effectively. The median Course Review Score was 4.7/5 for courses both with and without quizzes.

That was very relevant and surprising, because it suggests that students don’t base their course reviews on what helps them learn better but on other, more subjective factors. This means that basing product decisions only on students’ reviews and NPS might not help ed-tech teams steer their product development toward more effective learning technologies.

My experience as a Learning Designer, Researcher, and Product Manager has led me to conclude that the development of educational products must be guided by a deep understanding of cognitive and pedagogical theories. This is the main goal of the learning product team at Platzi: developing learning technologies that address our students’ needs and interests, guided by business goals, but always starting with a scientific understanding of what learning is and how to help people learn effectively.
