An exam is only valuable if it is part of the learning experience. To merely use tests as a means to discriminate between good/bad students is to waste an opportunity to promote growth mindset, deeper understanding, and the chance for a learner to take control of their own progress.

Platzi’s exams, at that time, did not enable learners to effectively learn from their own mistakes. This was low-hanging fruit: we could help students understand which mistakes they had made and which content they needed to reinforce to close any knowledge gaps. With that fixed, Platzi’s exams could take advantage of research-based methods to improve retention of content.

At Platzi, 90% of the questions in an exam have to be answered correctly to pass. Students can try again as many times as they want; the only condition is a 6-hour wait between attempts. The wait is there to prevent students from passing tests through trial and error.
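The two rules above can be written down in a few lines. This is only a minimal sketch, not Platzi's actual implementation: the function names are hypothetical, and it assumes that "90%" means "at least 90%" and that the 6-hour wait is counted from the previous attempt.

```python
from datetime import datetime, timedelta

# Values taken from the text; everything else in this sketch is an assumption.
PASS_NUMERATOR, PASS_DENOMINATOR = 9, 10   # 90% of questions must be correct
RETRY_COOLDOWN = timedelta(hours=6)        # wait between attempts


def has_passed(correct: int, total: int) -> bool:
    """Return True when at least 90% of the questions were answered correctly."""
    if total <= 0:
        raise ValueError("an exam needs at least one question")
    # Integer comparison avoids floating-point rounding at the 90% boundary.
    return correct * PASS_DENOMINATOR >= total * PASS_NUMERATOR


def can_retry(last_attempt: datetime, now: datetime) -> bool:
    """Return True once the cooldown since the previous attempt has elapsed."""
    return now - last_attempt >= RETRY_COOLDOWN
```

For a 20-question exam, 18 correct answers pass and 17 do not, which shows how little room for error a first attempt leaves.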

The problem was that 80% of students failed their first attempt, and fewer than 30% of those who failed tried a second time. Failing a test was also a predictor of inactivity: students who failed were more likely to stop learning, which increased their likelihood of churn.

When interviewed, students complained about the courses’ exams, but also confirmed an intuition I had: they would use the exam as a guide and, in a new tab, open the classes that covered the questions they had answered incorrectly. This process was tedious and frustrating, since it often took several tries to find the right class. Naturally, many students would drop out, never complete the test, and therefore not get certified.

We redesigned the exams in 3 main ways:

1. "Review your answers" before submitting

Students could revise and change answers before sending the test.

2. After submitting, students could review the exact class that covered each question they answered incorrectly

This connected errors to direct learning guidance.

3. The suggested class opened in a new tab

So progress and feedback remained visible.

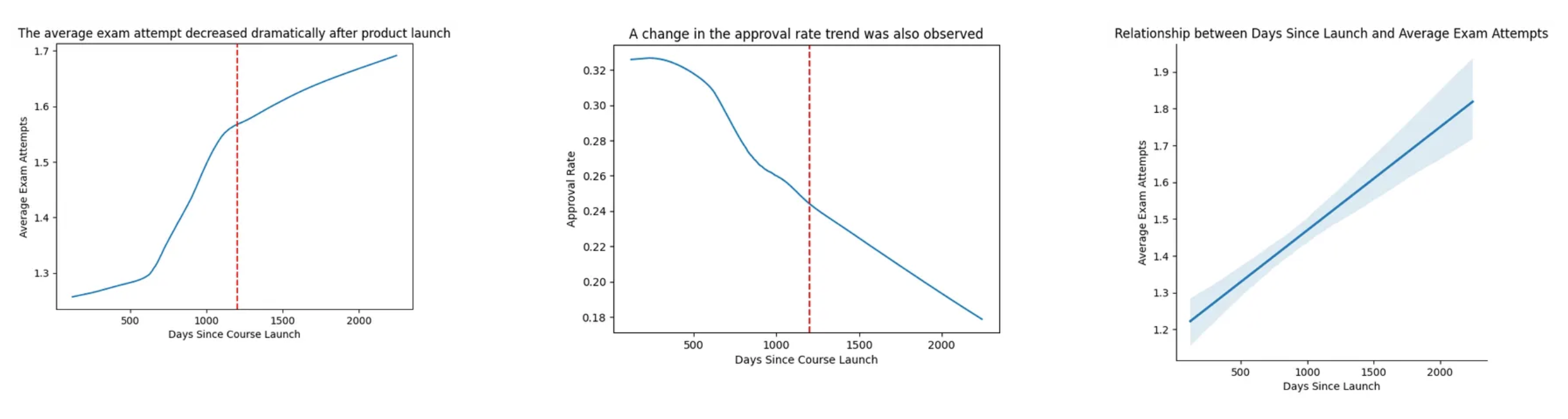

With these 3 changes, we significantly reduced average exam attempts (the number of times a course test is taken before it is passed), increasing certification rates and helping sustain student activation.

The red line in both plots marks the launch of the feature.

Overall, exam attempts trended down dramatically while the number of students taking tests increased, meaning this improvement held even under growth and scale.

This feature was deemed successful. While it might seem simple to add feedback to tests and quizzes, it required a strong effort from the learning design team: every question in every exam across Platzi needed to be linked to a specific class. More than 1,000 exams were mapped. Coordinating the learning team to do this was challenging, but they were genuinely excited about the pedagogical impact.
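To make the mapping concrete, here is a rough sketch of how a question-to-class link could drive the post-exam feedback. It is an illustration under assumptions, not the real data model: the `Question` fields, the `classes_to_review` helper, and the example URLs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    question_id: str
    correct_option: str
    class_url: str  # hypothetical field: the class that covers this question


def classes_to_review(questions: list[Question], answers: dict[str, str]) -> list[str]:
    """Return the classes behind incorrectly answered questions.

    Results keep exam order and list each class only once. Unanswered
    questions count as incorrect.
    """
    seen: set[str] = set()
    review: list[str] = []
    for question in questions:
        is_correct = answers.get(question.question_id) == question.correct_option
        if not is_correct and question.class_url not in seen:
            seen.add(question.class_url)
            review.append(question.class_url)
    return review


# Hypothetical exam data, for illustration only.
exam = [
    Question("q1", "a", "/classes/variables"),
    Question("q2", "c", "/classes/loops"),
    Question("q3", "b", "/classes/loops"),
]
to_review = classes_to_review(exam, {"q1": "a", "q2": "d", "q3": "a"})
```

The lookup itself is trivial; the cost was in filling in a link like `class_url` by hand for every question.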

On a personal note, this became one of the first clear examples of product, engineering, and learning teams collaborating to improve learning outcomes at scale.
